BOM there it is

Published on Aug 20, 2019

Came across an interesting behavior the other day and thought I'll share my frustration, maybe someone else runs into the same issue.

I received a report as an Excel (.xlsx) file that I had to import into our system. First step is usually to convert it to a CSV file and feed it into our importer—a service written in Go. The Go libraries for Excel files are not very nice to say the least so CSV is the way to go. I converted it by using Microsoft Excel for Mac. After running the import I saw that it was silently discarding the first column of the imported file.

I build a quick example to replicate the problem and figure out if it's related to the CSV library we are using: gocarina/gocsv. The neat feature of the library is that you can just unmarshal into a struct without using a loop, check for EOF and other error cases. It behaves more like the json package of the standard library.

package main

import (

"encoding/csv"

"fmt"

"io"

"os"

"github.com/gocarina/gocsv"

)

// Row is a row with columns

type Row struct {

One string `csv:"One"`

Two string `csv:"Two"`

Three string `csv:"Three"`

}

func main() {

cf, err := os.OpenFile(os.Getenv("FILEPATH"), os.O_RDWR|os.O_CREATE, os.ModePerm)

if err != nil {

panic(err)

}

defer cf.Close()

// Use ; as separator as that's what Excel gives us

gocsv.SetCSVReader(func(in io.Reader) gocsv.CSVReader {

r := csv.NewReader(in)

r.Comma = ';'

return r

})

var rows []Row

if err := gocsv.UnmarshalFile(cf, &rows); err != nil {

fmt.Println(err)

}

for _, row := range rows {

fmt.Println("row", row)

}

}

Even with the minimal example the first column never got unmarshaled into the struct. To narrow down it's not a problem with the library I quickly built a second example using smartystreets/scanners which also didn't work.

Note: Now when I tried to replicate it at home I couldn't actually replicate it and smartystreets/scanners worked using this minimal example. I'm not sure why it didn't work when I tried it the first time, maybe I was using an older version. For completeness sake this is the code I'm using now that works.

package main

import (

"fmt"

"log"

"os"

"github.com/smartystreets/scanners/csv"

)

// Row is a row with columns

type Row struct {

One string `csv:"One"`

Two string `csv:"Two"`

Three string `csv:"Three"`

}

func main() {

cf, err := os.OpenFile(os.Getenv("FILEPATH"), os.O_RDWR|os.O_CREATE, os.ModePerm)

if err != nil {

panic(err)

}

defer cf.Close()

scanner := csv.NewScanner(cf,

csv.Comma(';'), csv.Comment('#'), csv.ContinueOnError(true))

for scanner.Scan() {

if err := scanner.Error(); err != nil {

log.Panic(err)

} else {

fmt.Println(scanner.Record())

}

}

}

After seeing that both libraries (at least at the time of the investigation) failed on picking up the first column I realized that it's probably a problem with the file itself.

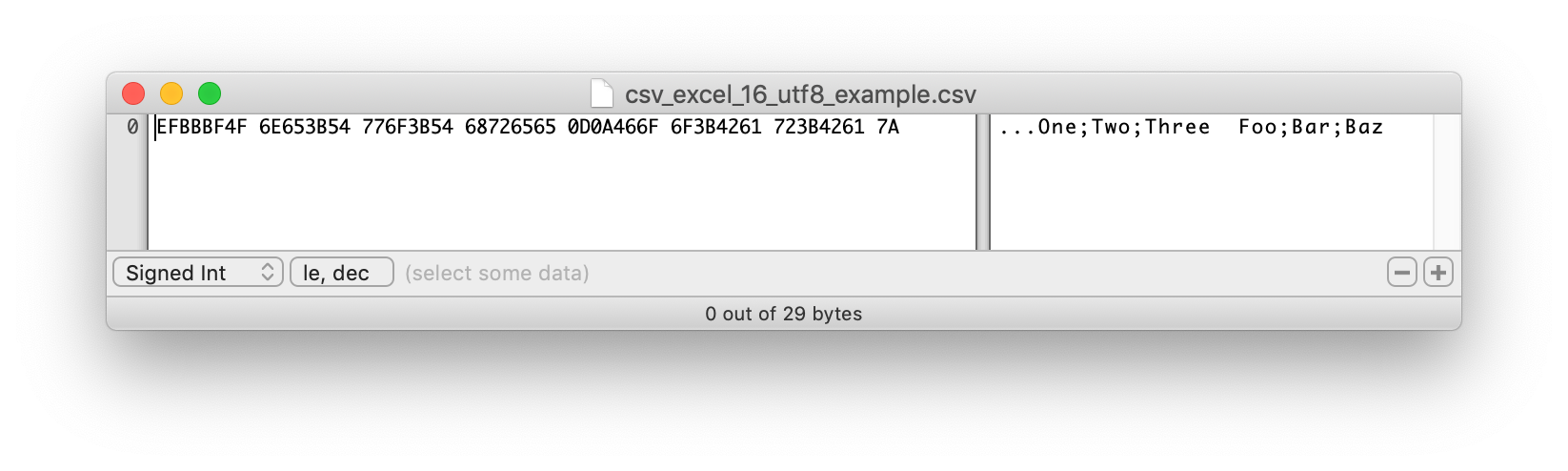

I ran a file from a previous report through the importer and it worked. At that point it was clear that it's a problem with the actual file and not the importer. I did what I should've done at the beginning and looked at the raw file with Hex Fiend which is one of my favorite tools and an incredible addition to everyone's toolbox.

After comparing the Hex representation of the working and non-working file it was very clear what was happening.

The non-working file was prepended with three invisible characters: ... which upon closer inspection is EFBBBF.

If you know a thing or two about encodings you know that's the BOM (Byte Order Mark) and that Go doesn't like it (”We don't like BOMs.” - bradfitz on the Go issue tracker).

Wikipedia says:

The byte order mark (BOM) is a Unicode character, U+FEFF BYTE ORDER MARK (BOM), whose appearance as a magic number at the start of a text stream can signal several things to a program reading the text:[1]

The byte order, or endianness, of the text stream; The fact that the text stream's encoding is Unicode, to a high level of confidence; Which Unicode encoding the text stream is encoded as.

As it turns out Microsoft adds these if you save a CSV from Excel.

Microsoft compilers and interpreters, and many pieces of software on Microsoft Windows such as Notepad treat the BOM as a required magic number rather than use heuristics. These tools add a BOM when saving text as UTF-8, and cannot interpret UTF-8 unless the BOM is present or the file contains only ASCII. Google Docs also adds a BOM when converting a document to a plain text file for download.

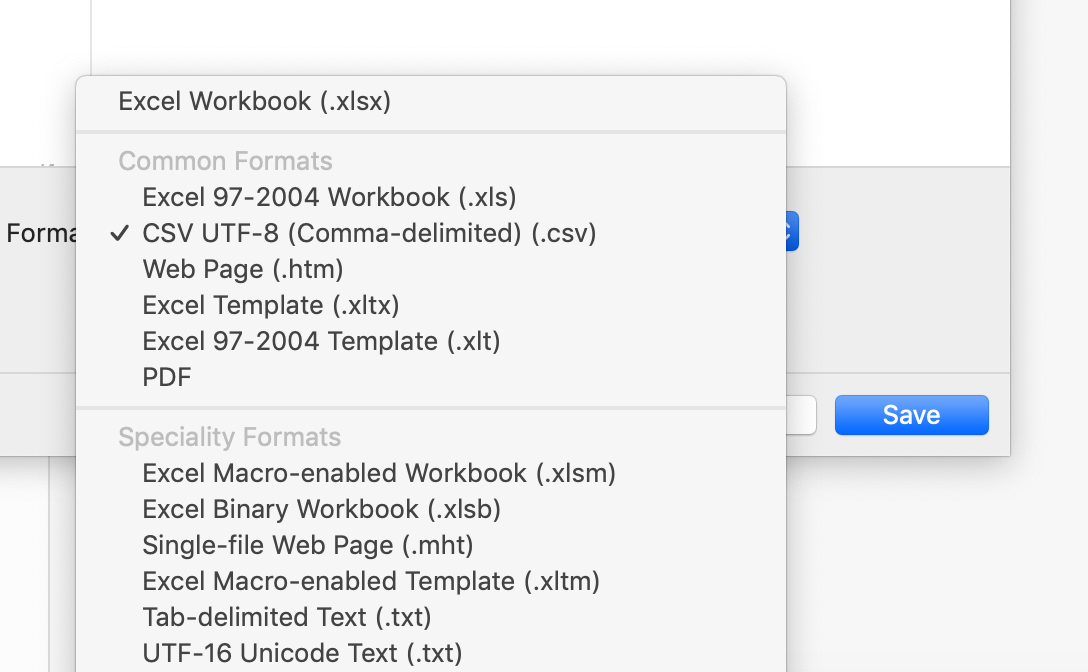

On top of that: This behavior is different between Microsoft Excel for Mac: Version 15.12.3 and Microsoft Excel for Mac: Version 16.28. I tried to replicate the issue on Version 15 at first, exported the CSV and the BOM control characters weren't inserted. I then upgraded and saw that they added the UTF-8 CSV option additionally to the "normal" CSV export which is now buried all the way at the bottom of the save dialog (Not even pictured in this screenshot) and the default one is UTF-8 with the additional BOM characters.

Example to replicate

I created 3 files, two from Excel Version 16 with the UTF-8 and the "normal" export. One from the only available CSV export in Excel Version 15 where you can easily spot the difference.

(image: screenshot-2019-08-20-at-22.14.56.png" caption="csv_excel_16_utf8_example.csv" >}}

(image: screenshot-2019-08-20-at-22.14.58.png" caption="csv_excel_16_example.csv" >}}

(image: screenshot-2019-08-20-at-22.15.00.png" caption="csv_excel_15_example.csv" >}}

Running the Go importer with gocsv and the three example files, the first column is missing in the example using the UTF-8 version of the file.

csv|master⚡ ⇒ FILEPATH=csv_excel_15_example.csv go run csv.go

row {Foo Bar Baz}

csv|master⚡ ⇒ FILEPATH=csv_excel_16_example.csv go run csv.go

row {Foo Bar Baz}

csv|master⚡ ⇒ FILEPATH=csv_excel_16_utf8_example.csv go run csv.go

row { Bar Baz}

After removing the BOM characters and running the importer everything worked as expected:

csv|master⚡ ⇒ FILEPATH=csv_excel_15_example.csv go run csv.go

row {Foo Bar Baz}

csv|master⚡ ⇒ FILEPATH=csv_excel_16_example.csv go run csv.go

row {Foo Bar Baz}

csv|master⚡ ⇒ FILEPATH=csv_excel_16_utf8_example.csv go run csv.go

row {Foo Bar Baz}

Workaround / Hack

If you can't touch the file, do a properly encoded export or can switch to a different CSV library you could also use this dimchansky/utfbom library to remove encoding information after parsing the file.

o, err := ioutil.ReadAll(utfbom.SkipOnly(bufio.NewReader(cf)))

if err != nil {

fmt.Println(err)

return

}

It's always the “Schei? Encoding” in the end isn't it?